This is a continuation of my deep-dive into understanding print quality issues when printing over USB with Octoprint, and adding buffer monitoring to Marlin.

Now that I had an objective mechanism to measure planner underruns, which we know is the likely cause of print quality issues, we can attempt to mitigate the issue.

My previous investigation with Octoprint's comm.py which manages the communication with the printer indicated that the default behaviour is to wait for an ok from the printer before sending the next command, which means that the command buffer is usually empty by the time the next command reaches the printer.

Generally, the planner buffer is usually kept full and does not usually underrun for the scenarios I have tested (apart from curves on Cura 4.7.1), however any potential delay which could be introduced by e.g. CPU load, resends (noise on USB cable) – can cause planner buffer underruns.

Making Octoprint send multiple commands inflight

The core algorithm to keep the command buffers full is as follows:

- Check if the printer is reporting available capacity in the command buffer

- Trigger Octoprint to send more commands

So at the minimum, we need a way to detect the available capacity in the command buffers, and a mechanism to trigger Octoprint to send more commands. We know we can use Marlin's ADVANCED_OK output for undersanding the available buffer capacities, we need to figure out a mechanism to trigger sends.

Octoprint's core logic for sending commands is inside comm.py's _send_loop, which is running in a thread, and checks for _send_queue to have something to send, and once command has sent, waits for _clear_to_send.wait() which is a CountedEvent / mutex mechanism that lets other threads tell the thread that it's cool to send another command.

_clear_to_send.set() in another thread is ultimately what causes the next command to be sent, so it looked like a good mechanism to start.

I wanted to add this buffer-filling functionality as a plugin because I feel a tad uncomfortable with introducing significant changes to comm.py, so I began with a rough naive plugin that inspects the ADVANCED_OK output and calls _clear_to_send.set() if there's capacity.

Turns out, this was too naive as the plugin would react to responses that do not reflect the current state of the buffers, and it doesn't know which lines it triggered, so it would cascade into serial buffer overruns extremely quickly. I also discovered the ok buffer size that determines the maximum _clear_to_send can buffer needs to be at least 2, otherwise calling _clear_to_send.set() won't do anything if _clear_to_send is already at 1.

My next attempt would keep track the number of commands inflight and use this as a basis to determine whether or not _clear_to_send.set() should be called, as added a minimum delay between triggering a send, and this worked pretty well considering the amount of code required:

ADVANCED_OK = re.compile(r"ok (N(?P<line>\d+) )?P(?P<planner_buffer_avail>\d+) B(?P<cmd_buffer_avail>\d+)")

LINE_NUMBER = re.compile(r"N(?P<line>\d+) ")

class BufferMonitorPlugin(octoprint.plugin.StartupPlugin):

# ok buffer must be above 1

def __init__(self):

self.bufsize = 4

self.max_inflight = self.bufsize

self.last_cts = time.time()

self.last_sent_line_number = 0

def on_after_startup(self):

self._logger.info("Hello World!")

def gcode_sent(self, comm, phase, cmd, cmd_type, gcode, *args, **kwargs):

self.last_sent_line_number = comm._current_line

def gcode_received(self, comm, line, *args, **kwargs):

if "ok " in line:

matches = ADVANCED_OK.search(line)

if matches.group('line') is None:

return line

line_no = int(matches.group('line'))

buffer_avail = int(matches.group('cmd_buffer_avail'))

inflight = self.last_sent_line_number - line_no

if inflight > self.max_inflight:

# too much in flight, scale it back a bit

comm._clear_to_send.clear()

self._logger.info("too much inflight, chill a bit")

self._logger.info("Buffer avail: {} inflight: {} cts: {}".format(buffer_avail, inflight, comm._clear_to_send._counter))

if buffer_avail >= 1 and (time.time() - self.last_cts) > 0.5 and inflight < self.max_inflight:

self._logger.info("sending more")

queue_size = comm._send_queue._qsize()

self._logger.info("Buffer avail: {} inflight: {} cts: {} queue: {}".format(buffer_avail, inflight, comm._clear_to_send._counter, queue_size))

self.last_cts = time.time()

comm._clear_to_send.set()

return line

I was able to confirm with buffer monitoring via M576 that it fills the buffers and decreases underruns. Graphs further below.

Note: I discovered that my Ender 3 v2 takes at minimum 9ms to respond to a command, where the timing was measured by inspecting the serial.log of Octoprint. This means that the default behaviour of waiting for an ok to send the next command was capped at ~111 commands a second, and I know that my Cura 4.7.1 sliced 3DBenchy will easily spike to 160 commands a second, which will most definitely cause underruns.

Making a plugin in Octoprint

Now that the core idea has proven to be workable, I began refining the plugin logic and testing with both dry-run prints and real prints to determine reliability.

I followed the Octoprint plugin guide and developed the plugin against a Docker container on my local machine for speed, but ran into different behaviour with the Virtual Printer that comes with Octoprint, so I could only really test the plugin against my Ender 3 v2 on my Octopi setup.

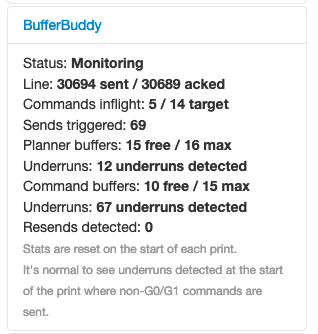

I called the plugin "BufferBuddy" after some deliberation cause the working title "buffer-filler.py" sounded a bit shit.

I ran into issues getting the plugin to load initially which eventually turned out to be a Python version specification issue. Once this was sorted, I was able to copy across the core logic from my prototype and began fleshing it out.

Understanding how to implement a UI took probably three times as long as implementing the core logic, which was hampered by needing to restart Octoprint to see changes. However, I eventually managed to make the UI behave the way I wanted!

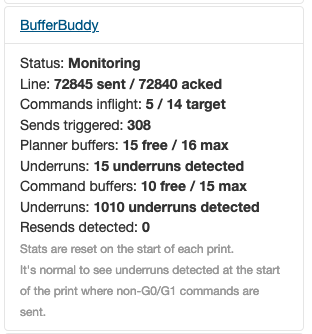

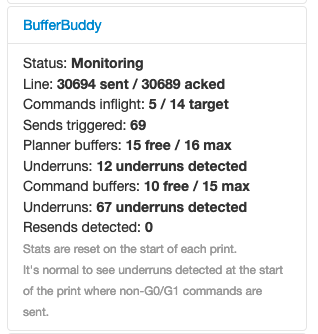

Introducing BufferBuddy

It's probably easiest to explain the impact of the plugin with graphs.

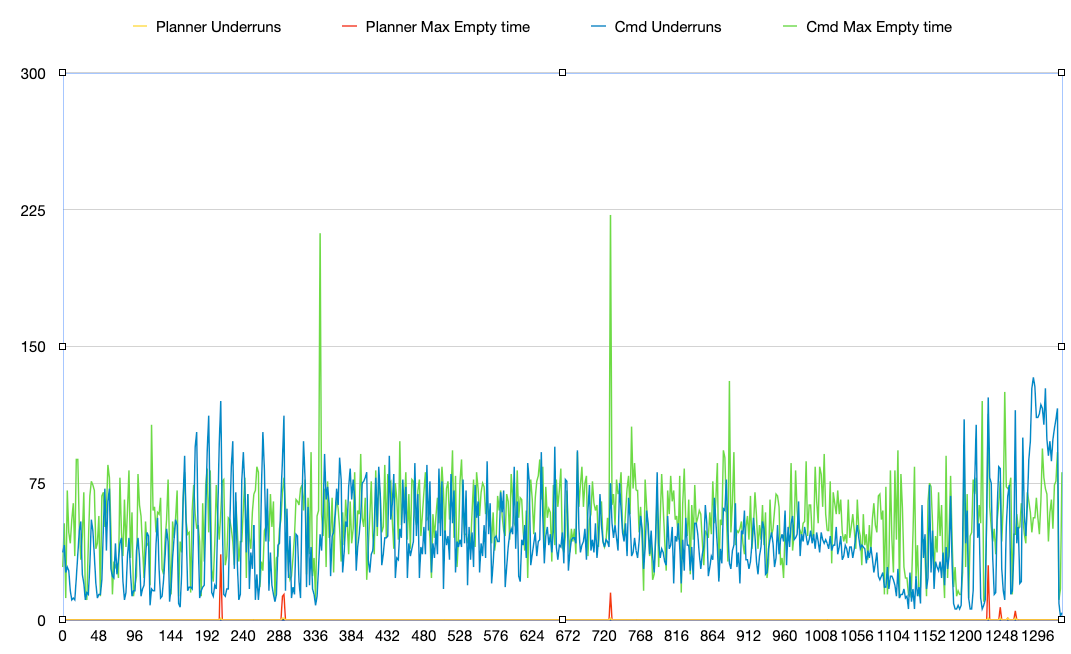

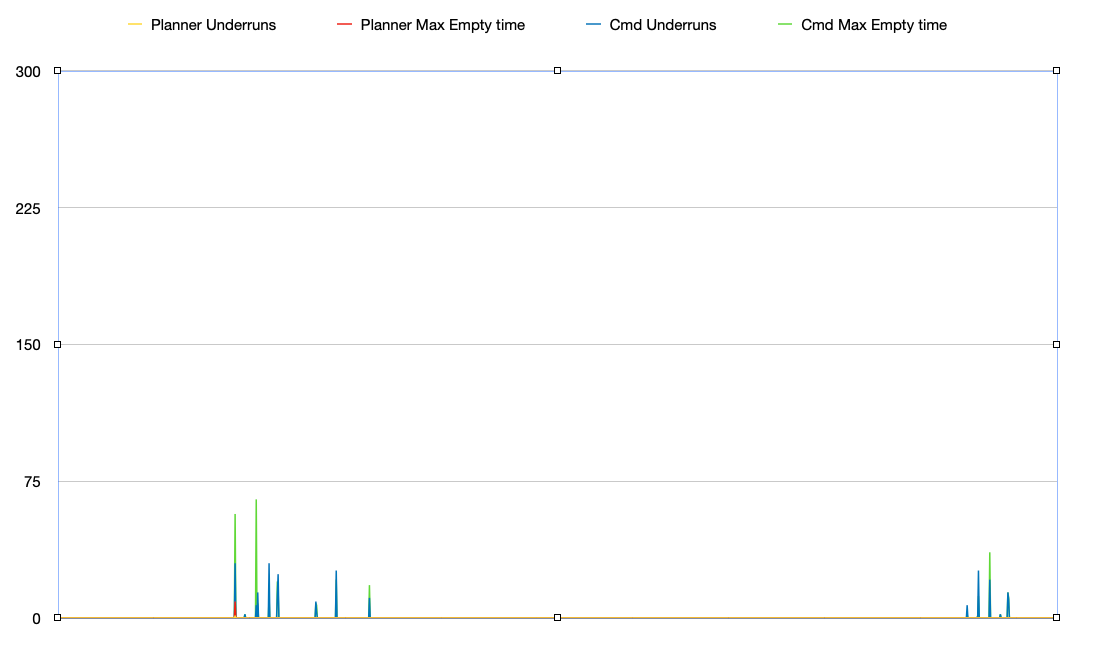

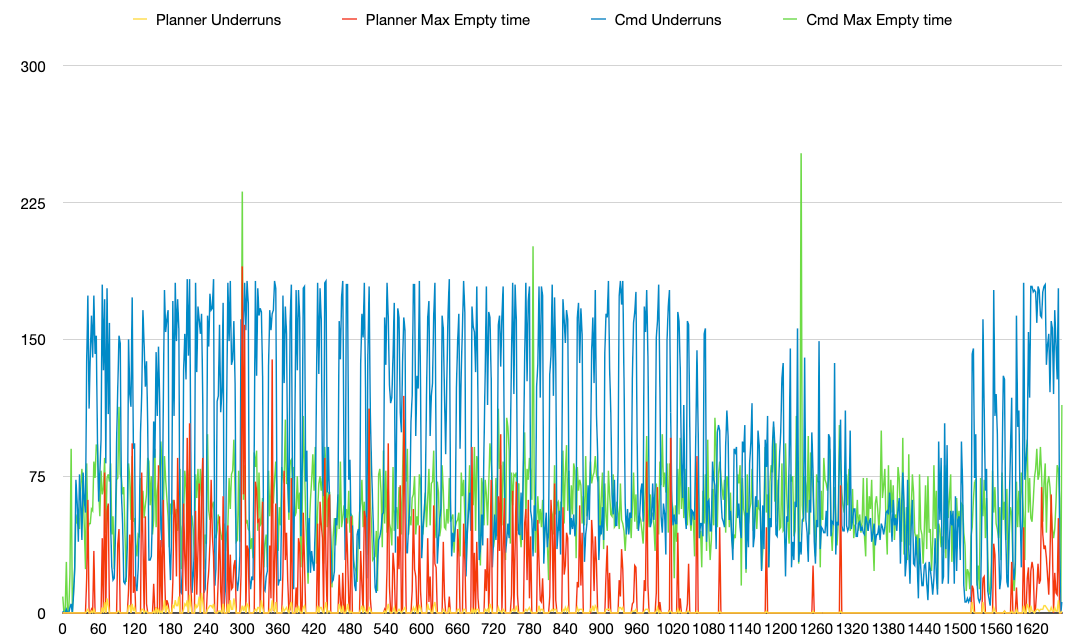

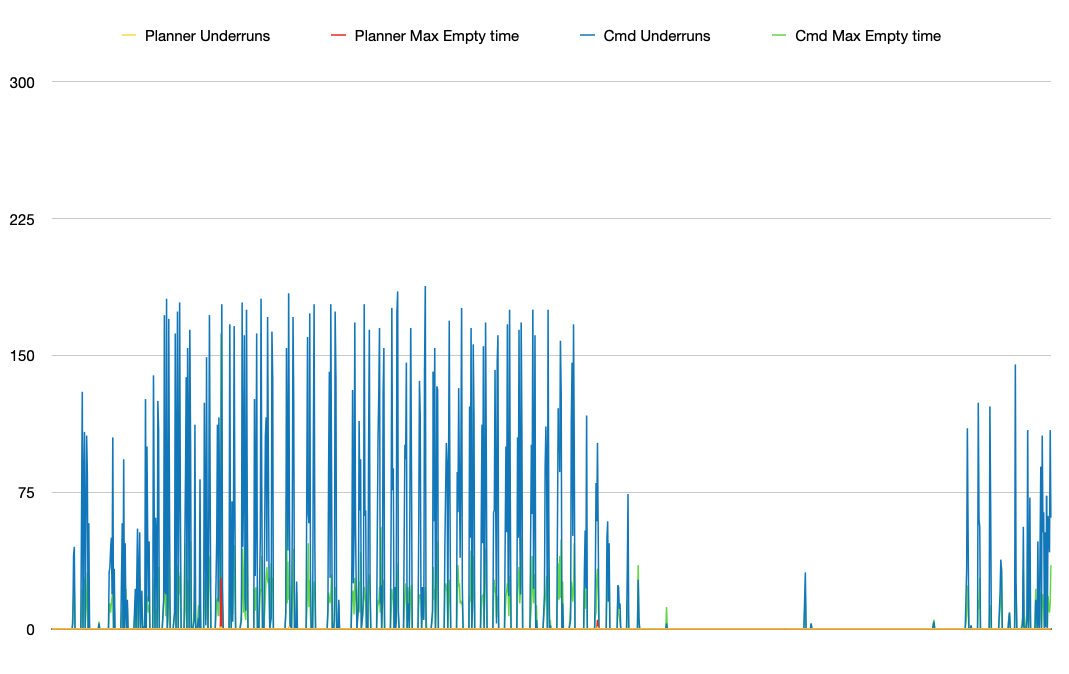

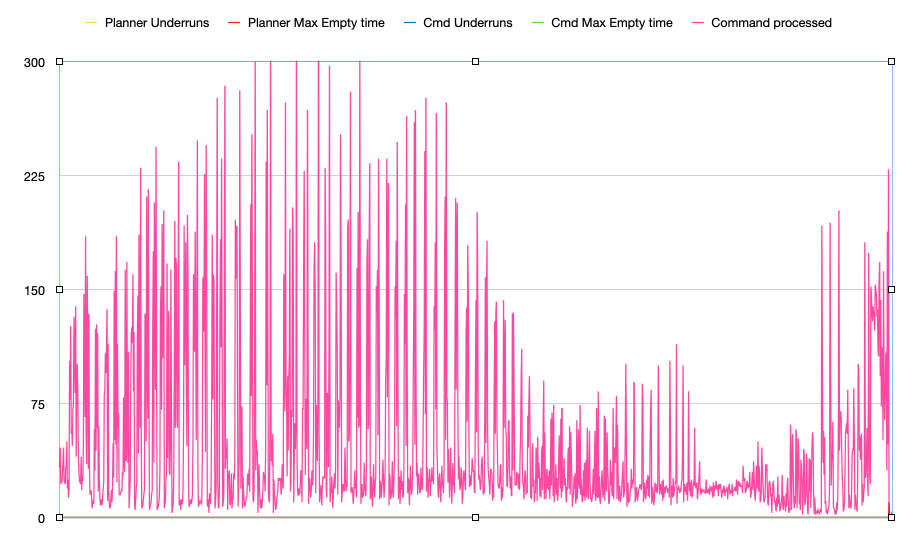

The graphs below are from graphing M576 output during a print of a 50% scale 3DBenchy sliced using Cura 4.6.2 and 4.7.1, and with BufferBuddy enabled/disabled, with the leading underrun artifacts caused by the purge line removed for clarity.

My printer is an Ender 3 v2 running Smith3D's Marlin fork which has improvements for the Ender 3 v2's LCD, with my own patches for M576, with BUFSIZE=16, BLOCK_BUFFER_SIZE=16, USART_RX_BUF_SIZE=64, USART_TX_BUF_SIZE=64.

The graphs indicate that we consistently see command buffer underruns, but only see ~6 instances of planner buffer underruns, where the maximum detected period that the planner buffer was empty was under 50ms.

With BufferBuddy enabled, command buffer underruns are mostly eliminated, with 13 instances of command underruns during print, and one planner buffer underrun with a max underrun period of 9ms.

For Cura 4.7.1, which we know produces problematic gcode, it's another story.

It shows severe planner underruns, with delays easily going above 75ms. Commands per second easily surpasses 150 per second, which is above the max throughput of ~111 per second which we calculated by measuring command/ack latency above.

BufferBuddy eliminates most of the planner buffer underruns, but there is still command buffer underruns due to the sheer gcode density, although it halves the maximum delay the command buffers remain empty.

Uploading to SD appears to behave differently with respect to having multiple lines inflight for more throughput, as the command buffer never gets filled, and it seems to be more dependent on serial RX buffer size which we can't easily detect, so this needs more work.

Actual Print Quality

So, now we know that we've significantly mitigated planner underruns with BufferBuddy, we can now see if we've resolved print quality issues with Cura 4.7.1 on an actual print.

Turns out Octoprint can detect when I print from SD from the Ender 3's interface, so I was able to get a "control" print with best case scenario for buffer filling.

Printing from SD showed zero planner and command underruns during the print (tiny smidge at the end probably due to built-in print completion commands), but commands per second peaking above 300 shows that it's going to be extremely hard to keep buffers filled due to latency when it's super dense gcode.

Printing of SD is a little bit better in the middle, but still exhibits over-extrusion on curves. The motors seem to make odd sounds during these curves, which is likely related.

For comparison, this is a 3DBenchy from Cura 4.6.2:

Putting aside that iPhone cameras aren't amazing at capturing the detail I want (handheld, at least), the Cura 4.6.2 sliced Benchy looks pretty good by comparison still.

Summary.. so far

So, it looks like BufferBuddy doesn't fully address the Cura 4.7.1 problem, but it still mitigates against potential planner underruns by keeping the command buffers full, which still should help against the occasional blip of load on lower-powered devices.

Want to check out the plugin? It's on my Github, but it's still considered experimental and may cause your printer to lock up.

Comments